For the last 15 years or so, I’ve been using IMDb to look up how good movies are supposed to be. I also try to do my bit by submitting ratings for those I’ve watched. So far, I’ve rated nearly 700 films. In recent years, I’ve noticed more and more people referring to Rotten Tomatoes as their trusted source. IMDb has also started listing Metacritic’s score alongside its own, and Google can show all three in its search results for a film. Naturally, I had to go and look at the data to figure out which site I should be using.

To make sense of my analysis, it will be helpful for you to know how I think of my scores (on a 10-point scale):

- 5 or lower: Not enjoyable/a waste of time.

- 6: Just about watchable, but not really worth it.

- 7: OK – fine to watch if you fancy the particular film, but also fine to miss.

- 8: Good – worth the time.

- 9: Excellent – as good as most films can hope to get.

- 10: One of my few all-time favourites. The films I would take with me to a desert island.

Most of the time, I try to watch films that I expect to score 8 or higher. For more indulgent choices like romantic comedies, a prospective 7 will do.

The data set

First, I took all the films I’ve rated 10 – there are only ten of those. Then I looked up my recent ratings of 6, 7, 8 and 9, and took ten films for each of those. Lastly, I included all the films I’d rated 5 or lower – there were only eight of those.

For those 58 films, I looked up the IMDb average rating, Metacritic’s Metascore, and the six different numbers Rotten Tomatoes publishes: the percentage of favourable reviews by all approved critics and by top critics (these two are called the Tomatometer, and the first is the main number they promote), the percentage of favourable audience ratings (the “audience score”), as well as the average rating given by each of those three groups.

The analysis

I created scatter plots to see how each of the eight numbers correlates with my own rating. The different scores use different scales and have different ranges of typical values. For example, the Metascores ranged from 18 to 94; the IMDb ones from 4.4 to 9.0. To make sure they’re all visually comparable, I made the vertical axis of each graph cover the full range of scores. This way, they all have a similar steepness and level of detail. The dots are transparent black, so the darker areas of the graphs are where multiple dots overlap.
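To illustrate what putting the scales on an equal footing amounts to, here is a minimal sketch that maps a score onto a common 0-to-1 range. It assumes the axis limits are the lowest and highest values seen for each metric (the two ranges quoted above); the function name `rescale` and the two sample scores are made up for illustration and are not taken from the data set.

```python
def rescale(score, lowest, highest):
    """Map a score onto 0..1, where 0 is the lowest and 1 the highest value seen."""
    if highest <= lowest:
        raise ValueError("highest must be greater than lowest")
    return (score - lowest) / (highest - lowest)


# Ranges quoted in the text above.
METASCORE_RANGE = (18, 94)
IMDB_RANGE = (4.4, 9.0)

# Hypothetical scores, chosen only to show the effect of rescaling.
print(rescale(56, *METASCORE_RANGE))
print(round(rescale(6.7, *IMDB_RANGE), 3))
```

A Metascore of 56 and an IMDb rating of 6.7 both land at about the midpoint of their respective ranges, so they would sit at the same height on graphs drawn this way.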

First, here’s how IMDb’s rating compares to my own scores:

You can see that films I rated more highly tend to have a higher average rating on IMDb. If you look more closely, you can also see that almost all films I scored 10 have higher IMDb ratings than most I scored 9, and those in turn have higher ratings than most I scored 8. Another thing to note is that all my 9s and 10s are deemed better by IMDb than those I rated 6 or lower.

Next, here’s how Metacritic’s and Rotten Tomatoes’ main ratings relate to my scores, alongside IMDb’s:

On Metacritic and Rotten Tomatoes, the range of scores for films I rated at least 7 is noticeably higher than for those I rated 6 or lower – the distinction is actually remarkably pronounced with the Tomatometer. However, they say nothing about whether something will be a 7 (just about worth watching) or a 10 (all-time favourite). It’s also disappointing that they have some of my 10s overlapping with my 6s, and even a 5 in the case of Metacritic. Sticking to high ratings on these sites would have caused me to miss some of my most cherished film experiences, while still wasting my time on some occasions.

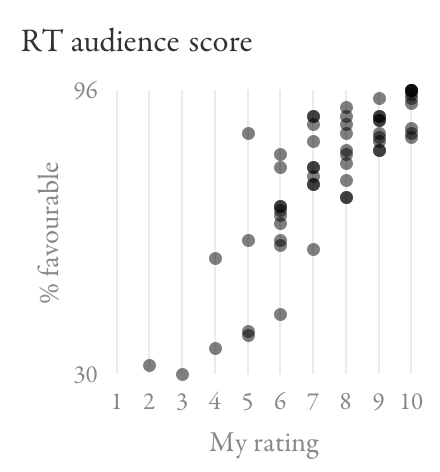

Let’s look at the other metrics Rotten Tomatoes publishes. Here’s how the percentages of favourable reviews from top critics and from the audience relate to my ratings, compared to the percentage from all critics:

The top-critics Tomatometer seems like a worse predictor of my scores than the general one. The audience score shows some correlation with my scores, although a weaker one than IMDb’s; it still gives the same range of scores to a lot of the films I rated anywhere from 7 to 10.

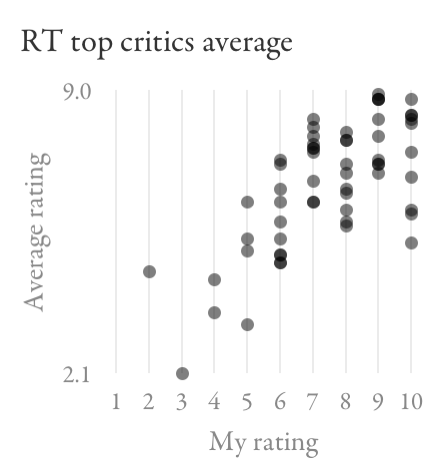

Finally, here are the average ratings from those three groups of people on Rotten Tomatoes (as opposed to the percentage of favourable reviews):

Again, there’s a lot of overlap between the scores for films I rated between 6 and 10, so these metrics wouldn’t do a good job helping me decide what to watch.

Making decisions

For me, IMDb looks like the most promising signal overall, especially for distinguishing among the top end of films. The data tells me that if something is rated at least about 7.8, I probably shouldn’t miss it and am unlikely to be disappointed:

One tricky distinction is between 7 (OK) and 8 (good), but IMDb does no worse here than the other sites.

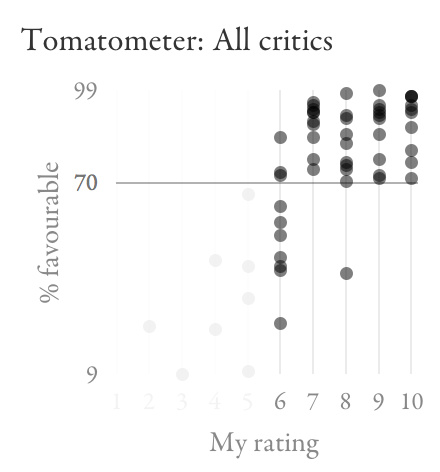

The biggest challenge is avoiding things that aren’t really worth watching (6 and below). On IMDb, there’s quite significant overlap between my 6s and 7s. The Tomatometer looks like it might do a better job of keeping those sets of films apart:

In this set of films, an IMDb score between 6.6 and 7.3 (that’s a lot of films in general) won’t help me decide if something’s worth watching. The Tomatometer, on the other hand, seems to have a magic threshold at 70%.

Based on all of this, I’m going to adopt the following strategy: check IMDb to see how much I’m likely to enjoy a film; if I’m interested in one that looks borderline (around 7.0), check Rotten Tomatoes to see if it scores more than 70%. I recently tried this for A Most Wanted Man (IMDb: 6.8, Tomatometer: 88%), with success.
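The strategy above can be sketched as a small function. This is only one reading of it: the 7.8 and 70% thresholds and the 6.6 to 7.3 borderline band come from the text, but the function name, the verdict labels, and what happens outside those ranges (between 7.3 and 7.8, or below 6.6) are assumptions added for illustration.

```python
def verdict(imdb, tomatometer=None,
            must_see=7.8, borderline=(6.6, 7.3), tomato_cutoff=70):
    """Return a rough verdict for a film from its IMDb rating and Tomatometer."""
    low, high = borderline
    if imdb >= must_see:
        return "don't miss"
    if imdb > high:
        # Assumption: above the borderline band, IMDb alone is trusted.
        return "probably fine"
    if imdb >= low:
        # Borderline on IMDb: let the Tomatometer break the tie.
        if tomatometer is None:
            return "check Rotten Tomatoes"
        return "worth a try" if tomatometer > tomato_cutoff else "skip"
    # Assumption: below the borderline band, skip without checking further.
    return "skip"


# A Most Wanted Man, using the figures quoted above.
print(verdict(6.8, 88))
```

With the figures quoted for A Most Wanted Man, the sketch returns "worth a try", which matches how that choice turned out.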

What’s going on with the Tomatometer?

I’ve been puzzling over why Rotten Tomatoes seems so remarkably good at identifying films worth watching, while being so remarkably poor at telling me just how good they are. Above what I call a 6, there’s just no correlation at all.

Recall that the Tomatometer doesn’t tell you an average rating like IMDb does, but rather what percentage of critics’ reviews were favourable. Whether a review is favourable is apparently determined by Rotten Tomatoes staff looking at its content, at least for reviews that have no numeric score or when the score is close to the middle.

Using this binary signal means that a lukewarm review will count the same as a stellar one. In essence, the Tomatometer only tells you what proportion of critics thought a film was at least fine. That number might be the same for a film people tend to think is just OK (e.g. Wanted – IMDb: 6.7, Rotten Tomatoes: 72%) and for one people tend to love (e.g. Léon – IMDb: 8.6, Rotten Tomatoes: 71%), so the Tomatometer won’t be able to tell these apart. (In fact, in a hypothetical world where everyone agrees how much they like a film, the Tomatometer would only ever show 0% or 100%: its granular 100-point scale relies on people being in disagreement.) Depending on how often this actually happens, this could explain at least in part why the Tomatometer doesn’t tell me how good a film is as long as it’s good enough.
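A small sketch makes the effect concrete. The review scores below are invented (they are not real reviews of any film), and treating 6 out of 10 as the line between favourable and unfavourable is an assumption made purely for this illustration, not a description of how Rotten Tomatoes classifies reviews.

```python
def percent_favourable(scores, cutoff=6):
    """Share of reviews at or above the cutoff, as a percentage."""
    favourable = sum(1 for s in scores if s >= cutoff)
    return 100 * favourable / len(scores)


def average(scores):
    """Plain mean of the review scores."""
    return sum(scores) / len(scores)


# Invented review scores out of 10, for illustration only.
just_ok = [6, 6, 7, 6, 7, 4, 5, 6, 7, 3]
loved = [9, 10, 9, 10, 9, 5, 4, 10, 9, 5]
unanimous = [7] * 10  # everyone agrees the film is a 7

for name, scores in [("just OK", just_ok), ("loved", loved), ("unanimous", unanimous)]:
    print(name, percent_favourable(scores), round(average(scores), 1))
```

The first two films both come out at 70% favourable even though their averages are 5.7 and 8.0, and the unanimous film jumps straight to 100%.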

Final thoughts

Here are some ways one might be tempted to interpret my findings:

- IMDb is the best movie rating.

- IMDb is the best movie rating for me.

- IMDb represents a sub-population that reflects my taste better than other communities do.

- By seeing the IMDb rating before watching a film, I’ve been predisposed to agree with the average, through a kind of confirmation bias or peer pressure.

- The crowd is wiser than the critics.

- I should be a critic.

- My taste in films is really average.

The first conclusion would obviously be the most useful to you as a reader. However, it’s possible that different sites work well for different people, in which case you’d need to test them against your own ratings. I don’t necessarily recommend repeating what I did (it was a very lengthy, manual process). But simply looking up the different ratings for some of the films you’ve seen, or just paying attention to them in the future, should give you a feel for whether one of them works better than the others.
